Health care continues to move toward digital platforms, and patients are increasingly turning to physician review websites (PRWs). Some evidence shows that roughly 75% of individuals are aware of PRWs and close to 30% of patients use them when making care decisions.1 Patients have been shown to wait up to 6 weeks to see providers with high ratings.2 As such, it is important for providers to understand their presence on online platforms. We present a cross-sectional study of 226,229 reviews of 12,250 dermatology provider profiles posted on the most popular PRW (Healthgrades), which, to our knowledge, is the largest data set on provider reviews in dermatology. We hope to better understand what correlates with more favorable reviews in order to improve patient experiences.

Methods

On May 3, 2018, we searched for all profiles under “dermatologist” on Healthgrades. After excluding 584 providers who were clearly not practicing dermatology, the search yielded 12,250 dermatologists.

Using Import.io scraping software, we compiled data from each profile webpage, including overall rating (“likelihood to recommend,” out of five stars), type of subspecialty, age, number of insurances accepted, procedures performed, and conditions treated. We also analyzed profiles based on wait time, gender, and whether a profile included a personal biography, care philosophy (CP), or malpractice history. We categorized dermatologists who graduated from top-25 medical schools, as ranked by US News and World Report, and top-20 dermatology residency programs, as published in Cutis, as “top-tier” graduates.3 Healthgrades also scores clinicians on seven subcategories, such as trustworthiness, explains conditions well, and office environment, all of which were included in our study.

After confirming that the data met all statistical assumptions, we calculated Pearson correlations for continuous variables, t tests for dichotomous variables, analysis of variance for polytomous variables, and a regression model to evaluate all variables simultaneously using SPSS (version 25).
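For readers less familiar with these tests, the following is a minimal Python sketch of two of the statistics named above: a Pearson correlation for a continuous variable and a pooled-variance (Student) t statistic for a dichotomous one. It is illustrative only and is not the SPSS procedure used in the study. The function names and all values are hypothetical, and the choice of the pooled-variance form of the t test is an assumption, since the text does not specify which form was used.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-products and sums of squares about the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(ss_x * ss_y)


def pooled_t(a, b):
    """Student t statistic (pooled variance) for two independent groups."""
    n_a, n_b = len(a), len(b)
    mean_a, mean_b = sum(a) / n_a, sum(b) / n_b
    ss_a = sum((v - mean_a) ** 2 for v in a)
    ss_b = sum((v - mean_b) ** 2 for v in b)
    pooled_var = (ss_a + ss_b) / (n_a + n_b - 2)
    return (mean_a - mean_b) / math.sqrt(pooled_var * (1 / n_a + 1 / n_b))


# Hypothetical toy values, NOT data from the study.
wait_minutes = [5, 10, 15, 20, 30]
ratings = [4.8, 4.5, 4.1, 3.9, 3.2]
with_bio = [4.6, 4.4, 4.7, 4.2]
without_bio = [4.0, 3.8, 4.3, 3.9]

r_wait = pearson_r(wait_minutes, ratings)   # continuous variable vs rating
t_bio = pooled_t(with_bio, without_bio)     # dichotomous variable vs rating
print(f"r = {r_wait:.3f}, t = {t_bio:.3f}")
```

In this toy example, a negative r indicates that ratings fall as wait time rises, and a positive t indicates a higher mean rating in the first group. Converting t to a P value additionally requires the t distribution with n_a + n_b - 2 degrees of freedom.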

Results

Dermatology providers were rated highly, with an average rating of 4.01 out of 5.00, and ratings varied among subspecialties

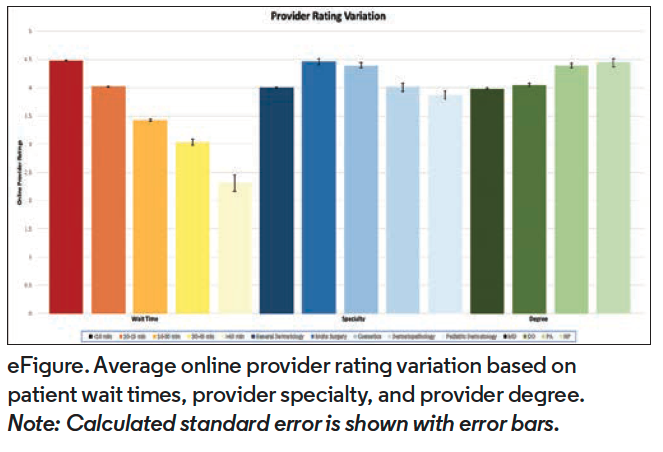

(Table 1). Age and total number of reviews negatively correlated with patient reviews, while number of insurances accepted did not meaningfully correlate (Pearson: -.186, -.255, -.042, respectively). Providers who posted more procedures and conditions treated on their profile had more ratings (.315 and .298, respectively; P<.01) and accepted more insurances (.260, .247, respectively; P<.01). Wait time negatively correlated with average review and midlevel providers (MLPs) were rated higher than both MD and DO physicians (P<.01) (eFigure).  Gender, malpractice history, graduating from a top-tier medical school or residency, and average income of the office zip code on the 2010 Census had no meaningful correlation with rating. Dermatologists who posted a CP and short biography were rated higher (P<.01), while those that posted awards or research information were rated similarly (Table 1). Patients posted a higher “likelihood to recommend” when providers were perceived as trustworthy, explained conditions well, had a positive office environment, easy scheduling, and clearly answered questions, but staff friendliness did not meaningfully correlate (Pearson: .039). All significant results were confirmed with a Bonferroni correction and remained significant (eTable 2).
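As a brief illustration of the Bonferroni correction mentioned above, the sketch below divides a significance level by the number of comparisons and flags which results survive. The function name, variable labels, P values, and alpha of .05 are all hypothetical assumptions for illustration. The study does not report the number of comparisons or the alpha used for the correction.

```python
def bonferroni_significant(p_values, alpha=0.05):
    """Return {label: bool}: whether each P value passes alpha / m.

    p_values maps a label to its unadjusted P value; m is the number of tests.
    """
    m = len(p_values)
    if m == 0:
        return {}
    threshold = alpha / m  # Bonferroni-adjusted per-test threshold
    return {label: p <= threshold for label, p in p_values.items()}


# Hypothetical P values, NOT results from the study.
example_p = {
    "wait_time": 0.0001,
    "care_philosophy": 0.004,
    "gender": 0.40,
    "top_tier_school": 0.03,
}

result = bonferroni_significant(example_p)
for label, passed in result.items():
    print(label, "significant" if passed else "not significant")
```

With four tests, the per-test threshold in this example is .05 / 4 = .0125, so a nominal P of .03 would no longer count as significant after correction.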

Discussion and Conclusion

Nurse practitioners (NPs) and physician assistants (PAs) were rated higher than physicians in our data set. Our regression showed that most of this difference is attributable to wait times, with MLPs having significantly lower wait times than physicians. However, even when accounting for wait time, PAs and NPs were rated slightly higher. This finding is consistent with a study that showed randomly assigning NPs in the emergency department improved patient satisfaction, even after adjusting for differences in wait time.4 Non-physician providers generally focus on less complex pathology with better outcomes, which may contribute to the higher ratings for MLPs. MLPs in dermatology may also be able to spend more time with patients, a variable our study cannot analyze. Our data suggest that including MLPs in the practice of dermatology does not negatively impact patient satisfaction and may even improve ratings.

In our analysis of profile content, we found that dermatologists who posted biographies and care philosophies had higher online ratings. This may favor younger providers who are more comfortable online and could explain why older providers had lower ratings. Not everything posted on a PRW helps, as research completed and awards earned did not correlate with higher ratings. Similarly, attending a highly ranked medical school or residency was not associated with higher ratings. While dermatologists may be interested in including academic pedigree or research accolades online, patients give higher ratings to providers who post personal information on their profiles.

While one study has shown professional staff demeanor to positively impact patient ratings,5 we found that staff friendliness did not meaningfully correlate with ratings. Additionally, another study suggested that providers who care for privately insured patients are rated higher.6 We found no evidence that a clinic’s zip code affluence correlated with dermatologist ratings. As in other specialties, wait time was the greatest predictor of provider rating.7 Over 20% of the variance in our multivariate regression model, which included all previously mentioned significant variables, was explained by wait time.

This study is not without limitations. The correlations we found cannot prove causation. Although providers who post biographies are rated higher, this does not necessarily mean that posting an “about” section will increase a score. Also, patients who post on PRWs might not be representative of the population as a whole. While some providers had only one or two reviews posted on their profile, we believe our data set is large enough to account for any volatility. Going forward, a double-blinded study asking patients to rate profiles with randomly assigned characteristics might help to further validate these findings.

In conclusion, we provide some evidence that dermatologists may improve patient experience by optimizing their presence on PRWs, employing MLPs, and keeping wait times low.

Mr Tye, Mr Smith, Mr Shaffer, Mr Khan, and Mr Franks are medical students at Joe R. & Teresa Lozano Long School of Medicine at University of Texas Health Science Center at San Antonio (UT Health San Antonio). Dr Shin is a second-year resident physician in the department of family and community medicine at UT Health San Antonio.

Disclosure: The authors report no relevant financial relationships.

References

1. Hanauer DA, Zheng K, Singer DC, Gebremariam A, Davis MM. Parental awareness and use of online physician rating sites. Pediatrics. 2014;134(4):e966-e975. doi:10.1542/peds.2014-0681

2. de Groot IB, Otten W, Dijs-Elsinga J, Smeets HJ, Kievit J, Marang-van de Mheen PJ; CHOICE-2 Study Group. Choosing between hospitals: the influence of the experiences of other patients. Med Decis Making. 2012;32(6):764-778. doi:10.1177/0272989X12443416

3. Namavar AA, Marczynski V, Choi YM, Wu JJ. US dermatology residency program rankings based on academic achievement. Cutis. 2018;101(2):146-149.

4. Dinh M, Walker A, Parameswaran A, Enright N. Evaluating the quality of care delivered by an emergency department fast track unit with both nurse practitioners and doctors. Australas Emerg Nurs J. 2012;15(4):188-194. doi:10.1016/j.aenj.2012.09.001

5. Smith RJ, Lipoff JB. Evaluation of dermatology practice online reviews: lessons from qualitative analysis. JAMA Dermatol. 2016;152(2):153-157. doi:10.1001/jamadermatol.2015.3950

6. Emmert M, Meier F. An analysis of online evaluations on a physician rating website: evidence from a German public reporting instrument. J Med Internet Res. 2013;15(8):e157. doi:10.2196/jmir.2655

7. Smith E, Clarke C, Linnemeyer S, Singer M. What do your patients think of you? An analysis of 84,320 reviews in ophthalmology. Ophthalmology. 2020;127(3):426-427. doi:10.1016/j.ophtha.2019.10.016