Hospital Star Wars: Sounding Off on the New CMS Star Ratings

In late July, the Centers for Medicare & Medicaid Services (CMS) finally published its highly anticipated hospital quality star ratings system, after months of pressure from industry stakeholders and Congress had delayed the release. The controversial ratings metric has been lauded by consumer advocacy groups and contested by hospital associations. Advocates claim the system will finally give patients an easy-to-use tool for making medical care decisions, while opponents argue that the system’s flawed methodology oversimplifies and distorts actual quality measures.

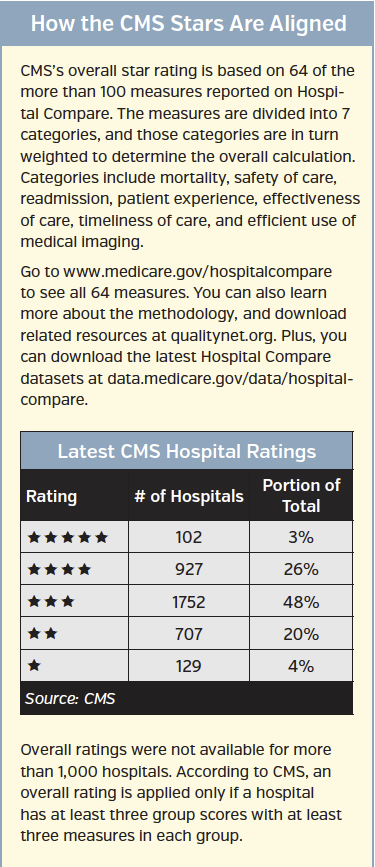

Formally known as Hospital Compare, the program is CMS’s attempt to rate hospitals using 64 quality measures across seven categories to form an overall score using a 5-star rating.

In order to understand the CMS hospital ratings system from the perspective of managed care professionals, First Report Managed Care spoke with multiple experts in the field, and the response was unanimously skeptical. Most experts expressed an understanding of the motivation behind releasing such a system but felt that CMS failed in the execution. They also expressed concerns regarding the system’s simplicity, transparency, practicality, usefulness, and whether or not it would actually have any impact on consumer choices.

These observations and questions might reveal an underlying belief that Hospital Compare is perhaps another bureaucratic program that yields borderline satisfactory work due to insufficient investment, inefficiencies, average competency, or a combination of these and other factors. But one chief medical officer we spoke with said he believes CMS knows exactly what it is doing—and it is doing it by design.

Donald Rucker, MD, CMO of Premise Health, raised an issue about the rating system that others, including the American Hospital Association (AHA), have brought up: The system mashes quality measures into a single rating, which may not reflect meaningful distinctions between hospitals.

“It is very artificial to take what is an absolute measure and put it on an ordinal scale,” he said.

According to Dr Rucker, the rankings could potentially end up being based on trivial differences between institutions, instead of factors associated with quality of care.

Dr Rucker suggested that this could be a product of CMS’s intention.

“[CMS] ultimately doesn’t care about how specific hospitals fare against each other,” he said. “It is just trying to inject accountability into the system. But the problem is that a lot of what they are injecting may turn out to be closer to random than what is ideal.”

Trivial Measures Making Big Differences

Dr Rucker explained that the system’s methodology could cause a spread in the comparative ratings, even when comparing facilities that are essentially identical. He noted that differences in quality may not exist to the degree conveyed by such a simple rating.

A letter signed by several groups, including AHA, cites Hospital Compare’s medical imaging quality measure as an example. The letter notes that the differences in the ratings in this category between 1-star hospitals and 5-star hospitals were not statistically significant. It points out that “CMS cannot tell the difference in performance among hospitals on Efficient Use of Medical Imaging, and yet the agency includes those measures in the star ratings.”

Other experts, including Gary Owens, MD, president of Gary Owens Associates, a medical management and pharmaceutical consulting firm, agree with this sentiment.

“CMS is on the right path, but star ratings work better for restaurants and movies,” Dr Owens said. “Unfortunately, cooking down quality of care to star ratings [may be] too general to help make meaningful choices for most patients.”

Norm Smith, president of Viewpoint Consulting, Inc, which surveys managed markets decision-makers for the pharmaceutical industry, said he wonders if the ratings are too simplistic. “Are they granular enough to focus on specific areas that, measured over time, truly improve the quality of care in hospitals?”

Some experts defended the intent behind the star ratings system, but still acknowledged flaws in its design. Barney Spivack, MD, national medical director of Medicare case and condition management at Optum, a health services and innovation company, said he agrees that separation in quality is easier to see when comparing a 1-star and 5-star hospital; however, these distinctions become hazy among the middling hospitals.

“There may be far fewer differences and very little spread between 2- and 4-star performers,” Dr Spivack explained. “There may not be validity in the rankings.”
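The concern about placing an absolute measure on an ordinal scale can be illustrated with a minimal sketch. The cutoffs, the 0-to-1 summary score, and the three hospitals below are hypothetical and chosen only for illustration; this is not CMS's actual scoring method.

```python
from bisect import bisect_right

# Hypothetical cutoffs on a 0-to-1 summary score (assumption, not CMS's thresholds).
CUTOFFS = [0.2, 0.4, 0.6, 0.8]


def to_stars(score: float) -> int:
    """Map a continuous score to 1-5 stars using fixed cutoffs."""
    return bisect_right(CUTOFFS, score) + 1


# Hypothetical hospitals: A and B are nearly identical, B and C are far apart.
hospitals = {"A": 0.595, "B": 0.605, "C": 0.795}
stars = {name: to_stars(score) for name, score in hospitals.items()}

for name, score in hospitals.items():
    print(f"Hospital {name}: score {score:.3f} -> {stars[name]} stars")
```

In this toy example, hospitals A and B differ by 0.01 yet land in different star groups, while B and C differ by 0.19 and share the same rating. Any scheme that buckets a continuous score will behave this way near its boundaries.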

Unfair Comparisons

Among other concerns, experts pointed out the problem caused by grouping all hospitals together, regardless of type. They highlighted that this could be unfair to medical centers that serve poorer populations and complex patients.

“The very fact that some of the nation’s best-known hospitals with the highest of ratings on other assessments… are slated to receive a small number of stars from CMS should make one question the validity and soundness of the methodology,” notes the aforementioned jointly signed letter.

Among these facilities, Ochsner Medical Center in New Orleans is nationally ranked in 3 adult specialty areas by US News and World Report’s America’s Best Hospitals rankings. Moreover, it is rated as a high performer in 7 other areas and listed as the top hospital in both the city of New Orleans and the state of Louisiana; yet, it received only 2 stars from CMS.

Additionally, US News and World Report ranked Georgetown University Hospital as the top medical center in Washington, DC, gave it a high national ranking in endocrinology, and rated it as a high performer in 3 other adult specialty areas; yet, it garnered only a single star from CMS.

It is probably not a coincidence that both Ochsner and Georgetown serve inner-city populations that are presumably poorer as a group than those served by typical suburban hospitals. Moreover, in Georgetown’s case, its high national ranking in diabetes could be a double-edged sword, since diabetes care can be complex and involve multiple comorbidities. The fact that Georgetown presumably cares for more complex patients may be negatively affecting its CMS ranking.

Socioeconomic Factors Matter

“It appears that CMS’s process is not adjusted for any socioeconomic factors,” offered Jennifer Christian, MD, president of Webility Corp, which advises organizations on workers’ compensation and disability benefits. She explained that the risk adjustment for hospital readmissions considers only the patient’s age, risk factors, and the underlying risk of readmission of the practitioner delivering care.

“No wonder the hospitals in small Midwestern communities have more 4- and 5-star rankings,” she said. “Those communities tend to be stable and ethnically less diverse. The underlying total well-being and social structure of their served populations is better to begin with.”

David Claud, MD, chief medical officer at Activate Healthcare, said that socioeconomic factors are a significant limitation of the measures.

“If measures are not properly risk-adjusted, then their utility in comparing relative hospital effectiveness is significantly diminished,” he said.

Dr Claud referred to a 2014 study published in Health Affairs that showed those living in high-poverty areas were 24% more likely than others to be readmitted. The study also found that married couples—presumably due to built-in support systems—have a substantially lower risk of readmission.

“The evidence suggests socioeconomic status is an important determinant of readmission rate, and as such, the limitations of readmission measures that don’t adjust for this need to be understood,” Dr Claud said.

Mr Smith said that he wonders why separate scales aren’t used for teaching and safety-net hospitals. “If CMS can profile hospitals [separately] to allow them into the 340B drug discount program, why can’t they do it here?”

Dr Spivack agreed, stating that teaching hospitals shouldn’t be compared with private institutions.

“Teaching institutions need to be compared to each other—not placed in the same mix with small private facilities that can get good results with a more selective patient population,” he said.

Some Hospitals Fare Better

However, Dr Spivack countered that even though Hospital Compare seems unfair to teaching and safety-net hospitals, “some have achieved very good results despite their vulnerable patient base.”

Among teaching hospitals, Atlanta’s Emory University Hospital, Chicago’s Rush University Medical Center, and Dallas’ Baylor University Medical Center all achieved 4-star rankings, as did safety-net hospital Presence Saints Mary and Elizabeth Medical Center in Chicago. Critics emphasized that these appear to be exceptions. Data released by CMS in July showed safety-net hospitals ranked lower as a group, with 2.88 average stars, than their non-safety-net counterparts at 3.09 stars.

When examining hospitals regardless of type, the data do tend to show that higher Hospital Compare ratings are linked with lower mortality and readmissions. A research letter published earlier this year in JAMA Internal Medicine found this to be the case in an analysis involving nearly 3100 hospitals. The researchers analyzed data from Hospital Compare to identify 30-day mortality and readmission rates for acute myocardial infarction, pneumonia, and heart failure, adjusting for both patient and hospital characteristics. Results showed that the mortality rate at 5-star hospitals was 9.8%, vs 10.4%, 10.5%, 10.7%, and 11.2% for 4-, 3-, 2-, and 1-star hospitals, respectively. The same pattern held for readmission rates, with 5-star hospitals readmitting 18.7% of patients, vs 20.2%, 21%, 21.8%, and 22.9% for 4-, 3-, 2-, and 1-star hospitals, respectively.

The researchers concluded that “choosing 5-star hospitals may be driving patients to better institutions.”
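To put the reported figures in perspective, the short sketch below computes the percentage-point gaps between star groups, using only the rates quoted above. The helper name `gap` is ours, and the calculation makes no claim about statistical significance.

```python
# 30-day rates (%) by star rating, as quoted from the research letter above.
mortality = {5: 9.8, 4: 10.4, 3: 10.5, 2: 10.7, 1: 11.2}
readmission = {5: 18.7, 4: 20.2, 3: 21.0, 2: 21.8, 1: 22.9}


def gap(rates: dict, low_star: int, high_star: int) -> float:
    """Percentage-point difference between a lower-rated and a higher-rated group."""
    return round(rates[low_star] - rates[high_star], 1)


print("Mortality, 1-star vs 5-star:", gap(mortality, 1, 5))
print("Mortality, 2-star vs 4-star:", gap(mortality, 2, 4))
print("Readmission, 1-star vs 5-star:", gap(readmission, 1, 5))
print("Readmission, 2-star vs 4-star:", gap(readmission, 2, 4))
```

The extremes are separated by 1.4 points on mortality and 4.2 points on readmission, while 2-star and 4-star hospitals differ by only 0.3 and 1.6 points. That narrow middle is consistent with Dr Spivack's remark about the limited spread between 2- and 4-star performers.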

Do Patients Really Choose Their Hospital?

Both Dr Claud and Dr Owens questioned the core idea behind the star ratings system, which is to give patients better information when they are choosing their medical care. They suggested that patients rarely choose the hospital they present to based on anything other than location, which undermines the intent of the star ratings system.

In medical emergencies, the choice is usually out of the patient’s hands, explained Dr Owens. Even if the patient wants to move afterwards, “the choice is often made [by the] attending physician, not the patient.”

He added that Hospital Compare may have value in elective surgery, “but even then, the general process is to choose a physician first, and the subsequent choice of hospital is limited to facilities where the chosen physician has clinical privileges.”

Dr Claud expanded by stating that surgery decisions are usually made based on preferred surgeon, not hospital.

“When you need a knee replacement, you don’t just go to a hospital and order one off the menu,” he said. “You pick an orthopedic surgeon.”

According to Dr Claud, while Hospital Compare can give patients an idea about surgically related complications, it cannot help select a surgeon. “You can’t tell from [the Hospital Compare] website if the orthopedic surgeon who is doing your knee is the surgeon at the hospital with the lowest or the highest complication rate,” he said.

Dr Claud noted that the value of Hospital Compare is buried and may be difficult for patients to tease out. For example, in obstetrics, Hospital Compare measures the percentage of deliveries that were 1 to 2 weeks early even though a scheduled delivery was not medically necessary.

“Shining the light on hospitals that are delivering moms too early is very valuable,” he explained. “That said, I don’t think too many people will sort through the data to get to that level.”

Thus, he continued, a pregnant woman might choose a 4- or 5-star hospital with a poor obstetrical measure, even though she’d be better off at a 2- or 3-star center with a good obstetrical measure.

What’s Next?

Hospital Compare is not going away, so the near-term answer might be better consumer education.

According to Dr Spivack, “patients will need help using this data sensibly and reasonably,” and CMS should “point out some of the uncertainties and assumptions that were made so that consumers know its limitations.”

Dr Christian emphasized that CMS must “acknowledge the imperfections in the measurement methodology and the efforts made to work around them.”

As for what lies ahead, Dr Rucker fears that hospital executives will begin “optimizing to the goals, even if the better thing lies in a different direction.” He added that “[the system] will be gamed. That’s human nature.”

He also predicts that hospitals will hire statisticians who understand the Hospital Compare data and can home in on the one or two numbers driving the overall ranking.

While potentially beneficial, the star system raises questions, Dr Owens said. He noted that, ultimately, measured areas are likely to improve but fears that this may not improve patient care.

“The key question is [does this] actually improve patient outcomes in the long run?” he asked. He also noted that increased focus on measured items might worsen outcomes in other areas. “Only time will tell if this is the case,” he said.

Catherine Cooke, PharmD, research associate professor at the University of Maryland School of Pharmacy, suggested looking at CMS’s Part D star ratings to see how so-called “gaming” can play out.

“Actions are taken to increase the star rating, but these actions, which result in improved numbers used in the calculations, may not have a positive impact on patients or their care,” she said.

For example, dispensing medication in 90-day supply increments often improves an adherence measure but not necessarily a patient’s actual adherence, explained Dr Cooke. Auto-refill programs can also help increase adherence measures, “but during several home pharmacy visits, I’ve seen stockpiles of medication from auto-refills that would last the patient over a year.” This can lead to medication waste, or worse, inappropriate use—even under the guise of an improved-performance measure, she said.
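The gap Dr Cooke describes can be sketched with a simple calculation. The example assumes the adherence measure is a proportion of days covered by dispensed supply, which is one common way to compute such measures from pharmacy fills; the fill dates and the number of doses actually taken are hypothetical.

```python
def proportion_of_days_covered(fills, period_days):
    """Share of days in the period covered by dispensed supply.

    fills: list of (start_day, days_supply) tuples, with days counted from 0.
    """
    covered = set()
    for start_day, days_supply in fills:
        end_day = min(start_day + days_supply, period_days)
        covered.update(range(start_day, end_day))
    return len(covered) / period_days


PERIOD = 360  # hypothetical measurement period, in days

# Hypothetical auto-refill pattern: four 90-day fills, dispensed on schedule.
fills = [(0, 90), (90, 90), (180, 90), (270, 90)]
measured = proportion_of_days_covered(fills, PERIOD)

# Hypothetical reality: the patient took a dose on only half of those days.
doses_taken = 180
actual = doses_taken / PERIOD

print(f"Measured adherence: {measured:.0%}, actual adherence: {actual:.0%}")
```

Because the measure counts what was dispensed rather than what was taken, the fills alone produce a perfect score while the unused half of the supply accumulates at home.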

“The focus ends up on the stars,” Dr Cooke concluded, “not the larger picture of quality.”