The Future of Electrophysiology: Using Mixed Reality During Cardiac Ablation Procedures

For decades, virtual reality (VR) has been heralded as a transformative technology, shaping the way users engage with all aspects of life, from entertainment to retail and even healthcare. Recent advances in technology miniaturization have expanded the range of available hardware and driven rapid development of the extended realities (XR), which have finally achieved satisfactory performance for use in clinical settings. Our team has developed an XR system to assist cardiac electrophysiologists (EPs) during electrophysiology studies and catheter ablation procedures.1,2 The system, called the Enhanced Electrophysiology Visualization and Interaction System (ELVIS, now marketed as CommandEP), was invented at Washington University in Saint Louis and is undergoing further development and commercialization by SentiAR, Inc.

Understanding the Extended Realities

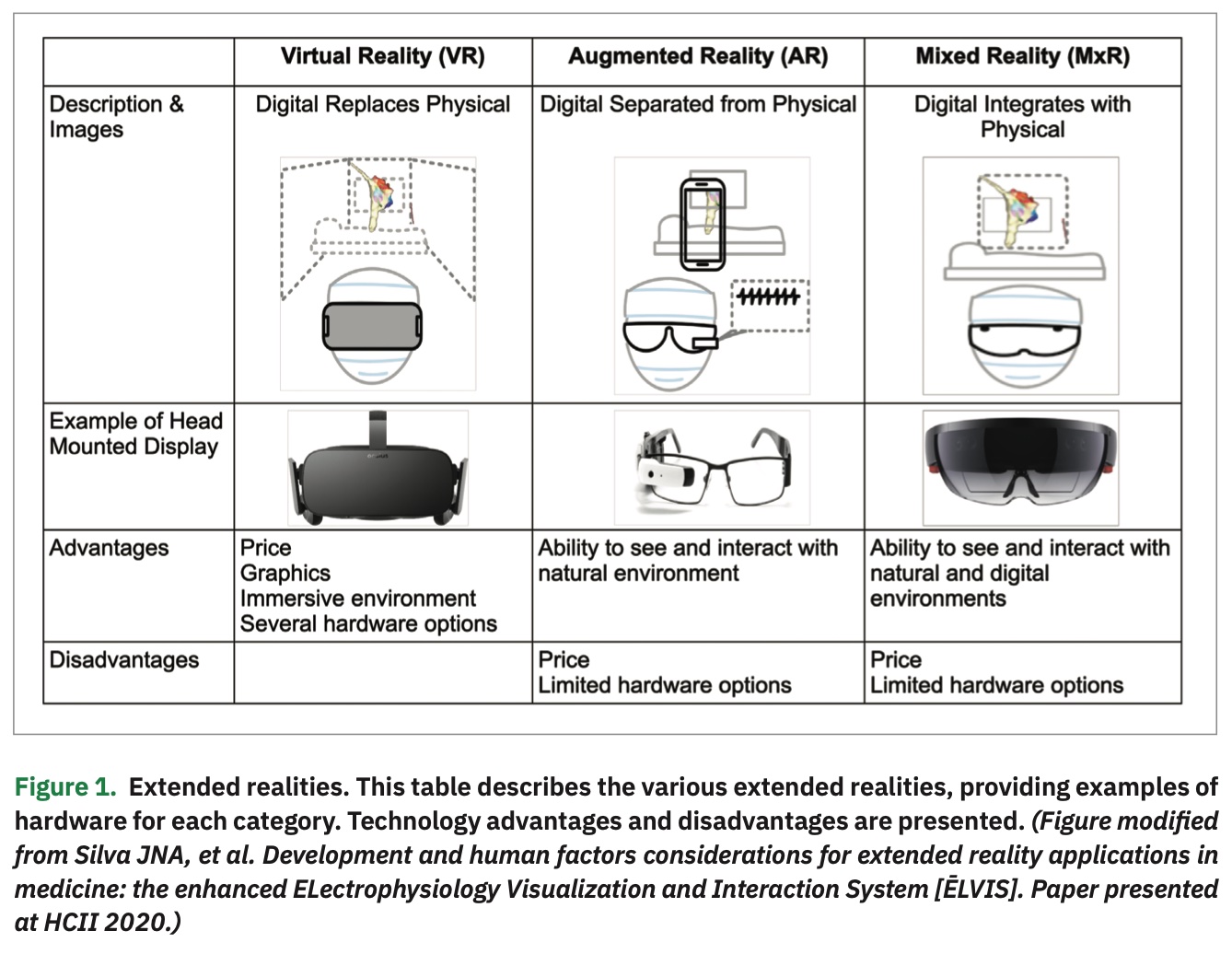

XR represents a continuum of mixtures of digital and natural/physical realities, and can use different technologies to introduce the user to various levels of digital augmentation. For the purposes of this discussion, we will focus on head-mounted displays (HMDs) that provide a mixed reality (MxR) display, allowing the viewer to visualize an anchored 3-dimensional digital image within their natural environment (Figure 1). In contrast, VR delivers a completely immersive visual, and often auditory, experience through an HMD. Widely available VR headsets have exceptional displays and are relatively low cost at $300-$750/device. However, fully immersive VR devices remove the user’s ability to interact meaningfully with their natural environment. While this may not impact certain rehabilitation applications designed for patient use, it represents a significant limitation for intra-procedural use.

The advent of augmented reality (AR) was a leap in XR capability, allowing the user to remain in their natural environment while viewing computational images. For example, a physician could view images such as x-rays, CT scans, or angiograms next to the patient to assist in medical decision making. In 2015,3 AR was used during percutaneous coronary intervention (PCI): the operator displayed a CT angiogram in their field of view to help guide therapy. With the addition of widely available MxR technologies in 2015, which brought anchored 3D holographic-like images, HMD wearers gained the ability to interact more meaningfully with those digital overlays. These images could be rotated, moved, increased in size or contrast, and anchored in 3D space in a more intuitive way. We believed that this advanced the technology readiness of MxR enough to favor proceeding with intra-procedural applications.

Developing CommandEP

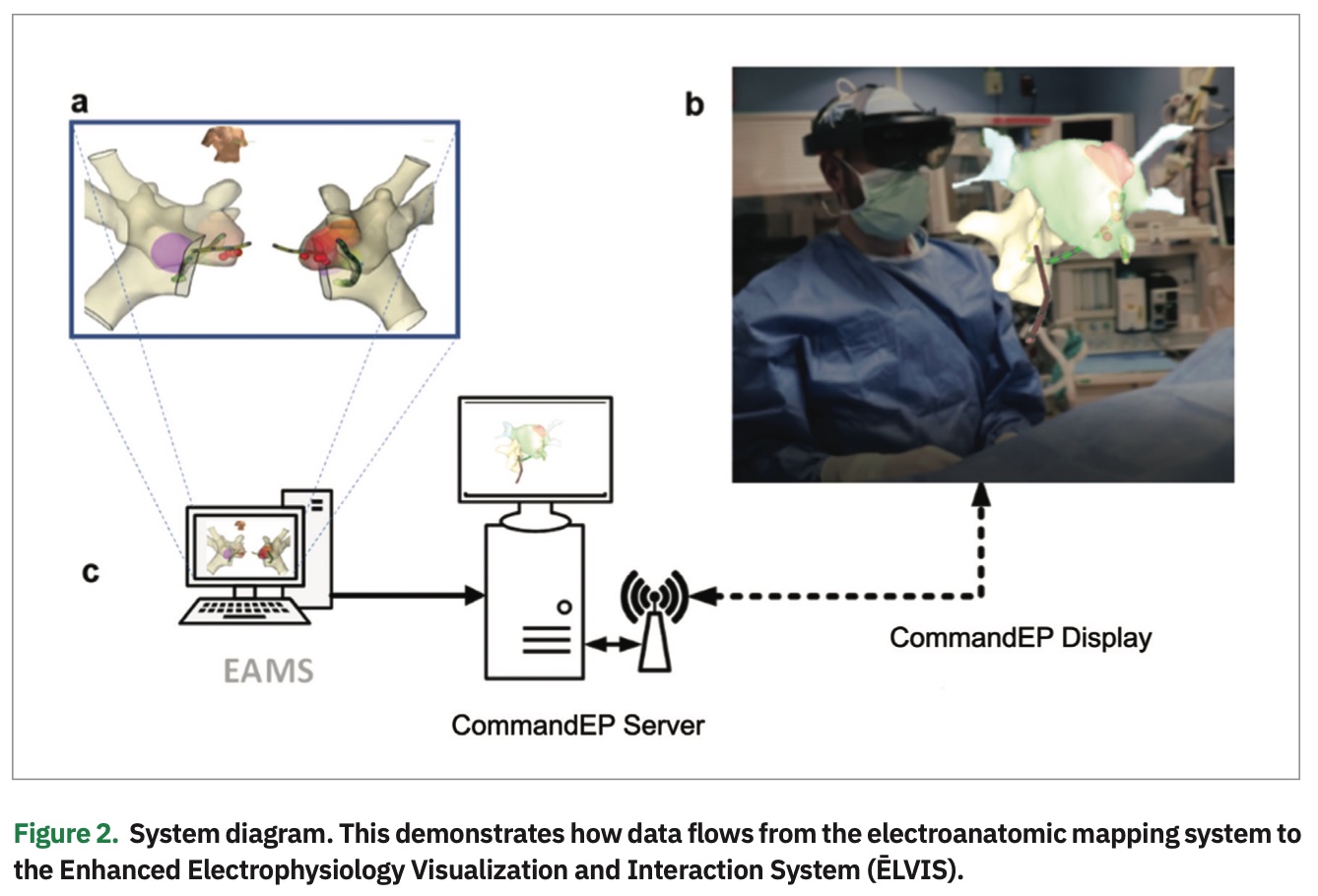

We believed that the 3D digital overlay in MxR could be used to display currently generated electroanatomic mapping system (EAMS) map data in true 3D, providing sterile, real-time, and direct control of that data to EP physicians. To achieve this, we created a system that could incorporate information from an EAMS into our proprietary software loaded on off-the-shelf hardware, including a Microsoft HoloLens HMD (Figure 2). This system allowed us to provide a real-time 3D hologram of the patient’s unique and specific electroanatomic substrate, as well as display real-time catheter electrode locations. In addition to improved visualization, the system provides EP physicians with the critical ability to control and manipulate models as needed to enhance their understanding of the substrate.

Due to the novelty of this technology, there were no pre-existing MxR performance standards. As such, established medical imaging standards (AAPM-TG18-QC)4 and best practices in human-computer interface design5 were adapted to create performance metrics for intra-procedural MxR use. Early observational data6 were obtained from simulated data, bench testing using a wet-lab setup, and the Engineering ELVIS (E2) study. In the E2 observational study, a secondary team observed the procedure through the MxR headset in patients (n=10) who were scheduled to have an electrophysiology study as part of their clinical course. The CommandEP system achieved an average frame rate of 53.3±5.4 frames/second (range 30-60 frames/second) with an end-to-end system latency of 68±42 ms.6 Battery runtime was 234±17.6 minutes. Geometric distortion was measured across multiple images and poses, resulting in a mean error of 0.52±0.31% SD (well below the imaging standard of less than 2% to 5%).6 Lighting conditions did influence perceived color, with more color hues perceived in darker rooms.
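As an illustration of how summary figures of this kind can be derived, the sketch below computes a mean and standard deviation from raw measurements, derives frames/second from frame timestamps, and expresses geometric distortion as a percent deviation from a nominal length. This is not the study's analysis code. The function names, the percent-deviation definition, and the sample values are assumptions made for illustration only.

```python
from statistics import mean, stdev


def summarize(samples):
    """Return (mean, sample standard deviation) for a list of measurements."""
    return mean(samples), stdev(samples)


def frame_rates(timestamps_s):
    """Instantaneous frames/second from consecutive frame timestamps (seconds)."""
    return [1.0 / (b - a) for a, b in zip(timestamps_s, timestamps_s[1:])]


def distortion_percent(measured, nominal):
    """Percent deviation of a measured length from its nominal length.

    Assumed definition for illustration; the article does not spell out
    the exact formula used in the study.
    """
    return abs(measured - nominal) / nominal * 100.0


# Hypothetical frame timestamps in seconds (not study data).
timestamps = [0.000, 0.018, 0.037, 0.055, 0.074]
fps_mean, fps_sd = summarize(frame_rates(timestamps))
print(f"frame rate: {fps_mean:.1f} +/- {fps_sd:.1f} frames/second")

# Hypothetical test-pattern edge: 100 mm nominal, 100.5 mm as displayed.
print(f"distortion: {distortion_percent(100.5, 100.0):.2f}%")
```

The same `summarize` helper could be applied to latency or battery-runtime samples, since each reported figure is a mean with a standard deviation.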

Creating an intuitive and easy-to-use interface was a unique challenge, dependent on understanding the user needs and the environmental limitations. Early iterations of the system relied on a gaze-gesture method for selection that required the user to gaze at the button of interest, and then point and click with a hand motion to “select” the button. However, because the target use case was intra-procedural, early human factors testing revealed that the interface needed to be “hands free” so the user could keep their hands on the catheter during the procedure. After exploring various options, we created a gaze-dwell method of selection in which the user makes slight head motions to hover a virtual cursor over a target button, then holds their gaze (or “dwells”) over the button to activate or “select” that feature. Given the rapid developments in natural language processing (including expansion of language/accent libraries) and pupillary eye tracking, more sophisticated methods of navigational control may soon be possible.
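The gaze-dwell idea can be sketched as a small timer: selection fires once the cursor has rested on the same button for a set time, and the timer resets whenever the cursor moves elsewhere. This is a minimal sketch under stated assumptions, not the CommandEP implementation. The class name, the `update` method, and the 1-second threshold are hypothetical, since the article does not give a dwell time.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DwellSelector:
    """Selects a button once the cursor has rested on it for dwell_s seconds."""

    dwell_s: float = 1.0  # hypothetical threshold; not stated in the article
    _target: Optional[str] = None
    _since: float = 0.0
    _fired: bool = False

    def update(self, hovered: Optional[str], now: float) -> Optional[str]:
        """Feed the button under the cursor (or None) once per frame.

        Returns the button name on the frame it is selected, else None.
        """
        if hovered != self._target:
            # Cursor moved to another button or off all buttons: restart timer.
            self._target = hovered
            self._since = now
            self._fired = False
            return None
        if hovered is not None and not self._fired and now - self._since >= self.dwell_s:
            self._fired = True  # fire only once per continuous dwell
            return hovered
        return None


# Hypothetical frames: (button under cursor, time in seconds).
selector = DwellSelector(dwell_s=1.0)
frames = [("rotate", 0.0), ("rotate", 0.5), ("rotate", 1.0), ("rotate", 1.5), (None, 2.0)]
print([selector.update(button, t) for button, t in frames])
# [None, None, 'rotate', None, None]
```

Firing only once per continuous dwell avoids repeated activation while the user keeps looking at the same button, which matters when the hands are occupied and there is no separate "release" gesture.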

Clinical Testing

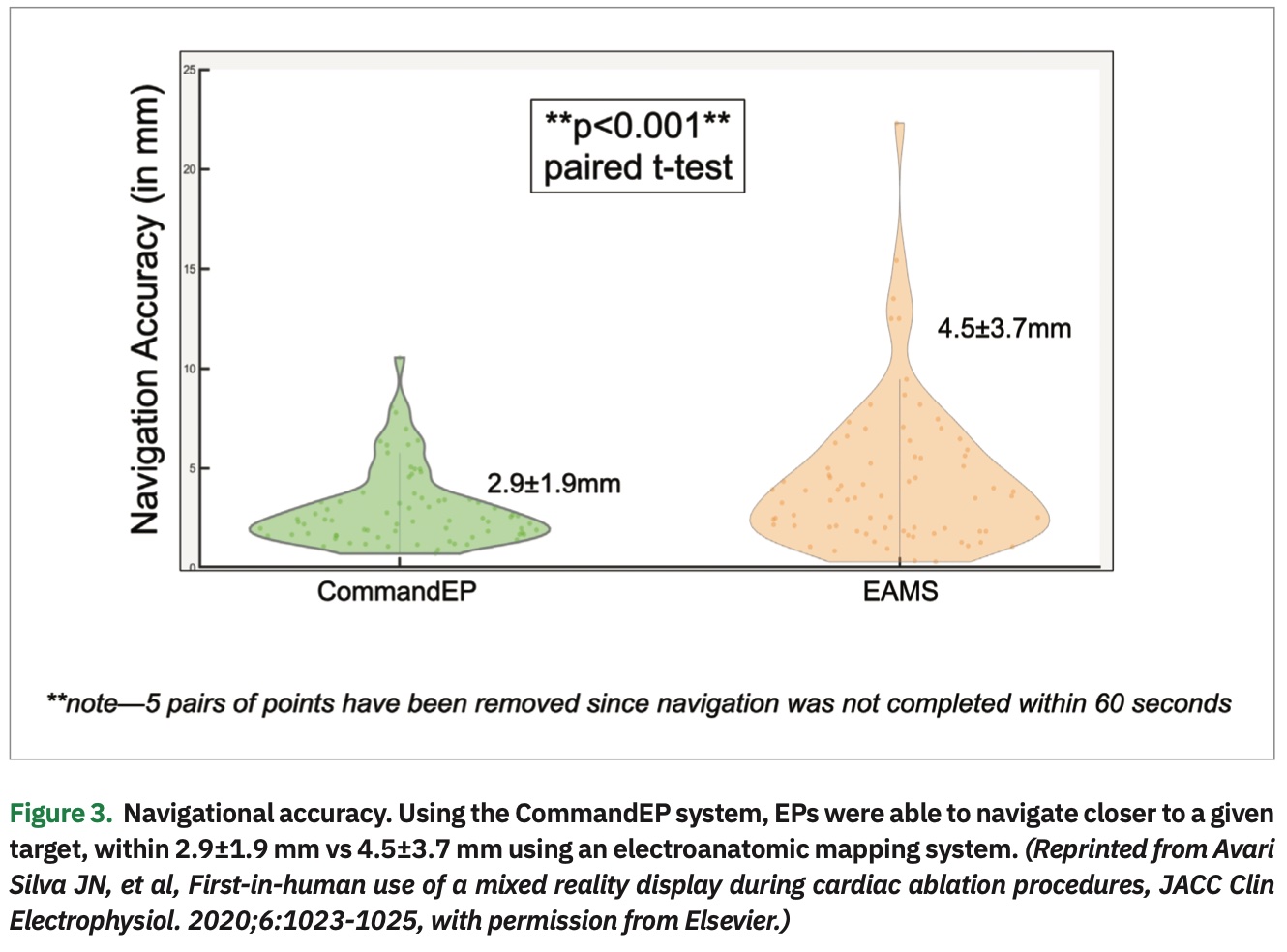

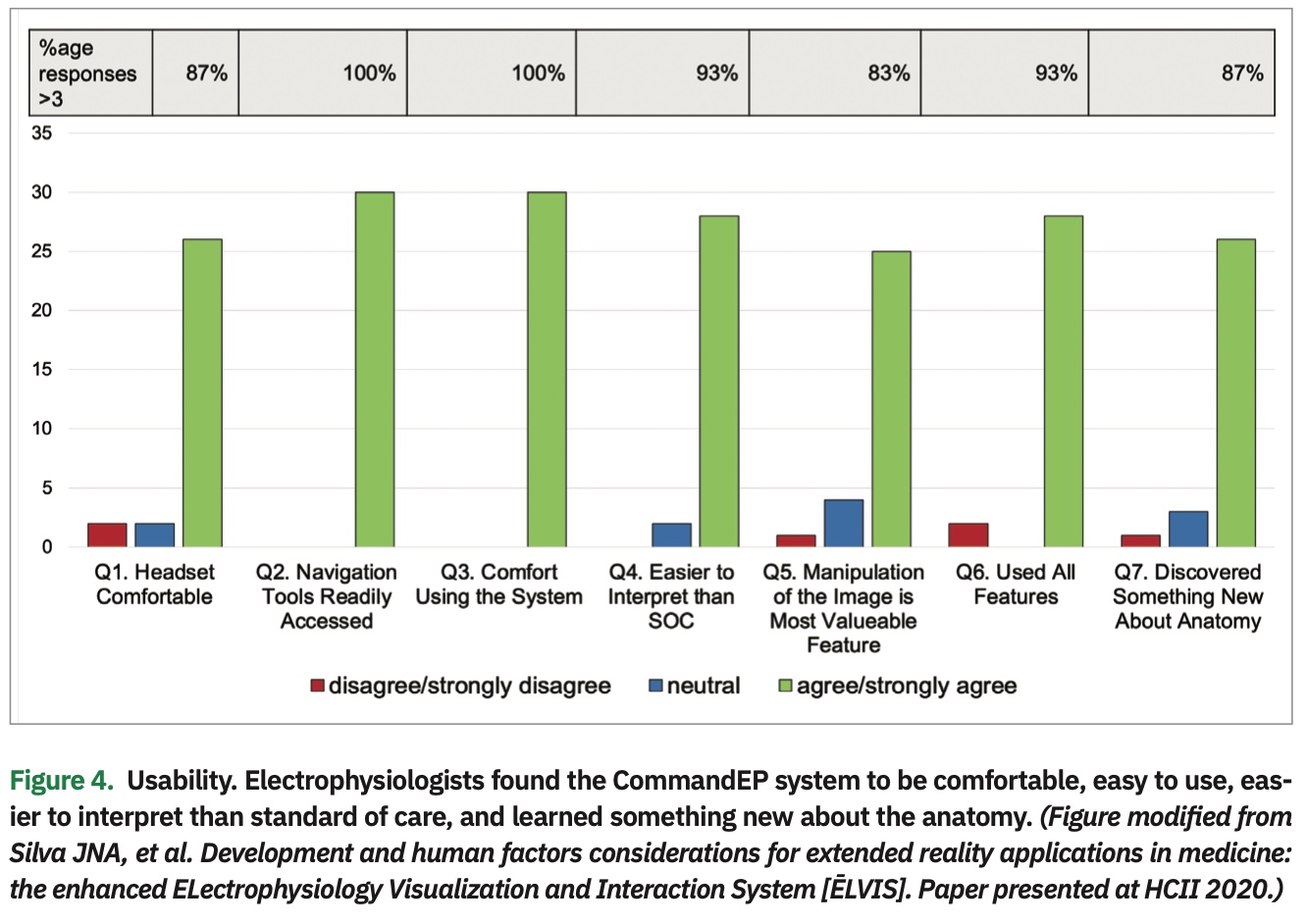

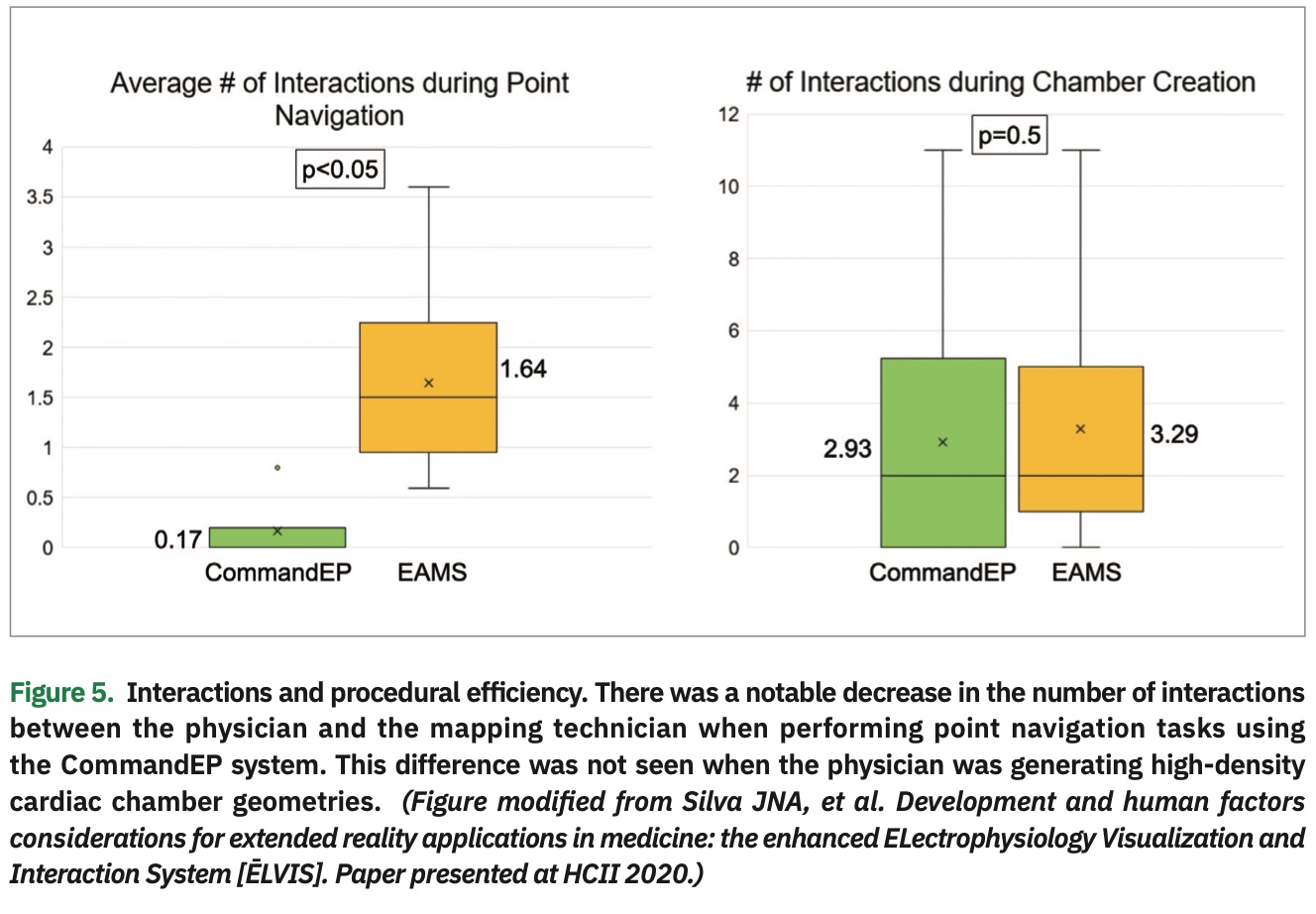

Once the system was fully developed and validated, the Cardiac Augmented Reality (CARE) Study was undertaken to better understand the impact of this kind of technology on EP procedures.7 The 3 endpoints for this study were: (1) navigational point accuracy, (2) usability, and (3) workflow (as measured by number of physician-mapper interactions). The physicians performed a series of study tasks during the post-procedural waiting phase of the ablation procedure. These tasks included: (1) creating a full, high-density cardiac chamber using any catheter (time limited to a maximum of 5 minutes), and (2) navigating to 5 geographically distinct “targets” marked in the EAMS with lesion markers (time limited to a maximum of 60 seconds/point). Once the physician felt they had reached the given target, a second marker was placed in the EAMS, and navigation accuracy was calculated as the Euclidean distance between the 2 points, using the (x,y,z) coordinates that the EAMS provided for each point. Each of these tasks was carried out under 2 different conditions: using the current standard of care, including the EAMS, or using the CommandEP system. Throughout the study tasks, a research coordinator located inside the EP lab marked each “ask” or “order” that the physician directed to the mapping technician. Post procedure, physicians completed an “exit survey” to capture usability of the system.
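The accuracy calculation described above is the standard 3D Euclidean distance. A minimal sketch follows. The function name and the coordinates are hypothetical and are not study data.

```python
import math


def navigation_error(target, reached):
    """Euclidean distance between two (x, y, z) points, in the input units."""
    return math.dist(target, reached)


# Hypothetical marker coordinates in millimetres, for illustration only.
target_marker = (10.0, 20.0, 30.0)   # lesion marker placed as the target
reached_marker = (12.0, 23.0, 36.0)  # marker placed where the physician stopped

print(navigation_error(target_marker, reached_marker))  # 7.0
```

Here the offsets are 2, 3, and 6 mm along the three axes, so the distance is the square root of 4 + 9 + 36 = 49, or 7 mm.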

The data showed that physicians navigated to a target more accurately when using the CommandEP system than when using standard EAMS, coming within 2.9±1.9 mm vs 4.5±3.7 mm (P<.001) (Figure 3). Usability data, as assessed through the post-procedure exit survey, demonstrated that EPs agreed or strongly agreed with these statements: the headset was comfortable (87%), navigation tools were readily accessible (100%), they were comfortable using the system (100%), data were easier to interpret than current standard of care (93%), manipulation was the most important feature (83%), users interacted with all the features (93%), and users discovered something new about the anatomy (87%) (Figure 4). Lastly, there was a significant decrease in the number of interactions between physician and mapping technician when the physician was completing point navigation tasks (0.17 vs 1.64 interactions, P<.05), although there was no significant change in the number of interactions during chamber creation (2.93 vs 3.29 interactions, P=.5) (Figure 5).8

Future Directions

Clinical validation of emerging XR systems should focus on demonstrating clinical benefit that will result in improved patient care. Our testing shows that these technologies can now be developed to meet the performance metrics necessary for comfortable, clinical intra-procedural use and, when deployed in a clinical setting, provide value to the end user in usability, procedural efficiency, and navigational accuracy. Further studies are required to establish meaningful use and to validate these findings in a broader user base during more procedures. Development is also underway to integrate innovative XR interfaces and other integral aspects of the EP study, including electrograms and ultrasound.

Disclosures: Dr. Avari Silva reports grants and non-financial support from SentiAR, and non-financial support from Abbott/St. Jude Medical, during the conduct of the study. Outside the submitted work, she reports grants and non-financial support from SentiAR, as well as support from Cardialen and Abbott. Dr. Avari Silva is a co-Founder of SentiAR, serves on the SAB, and is a consultant to the company. Mr. Southworth reports he is a co-Founder, shareholder, and employee of SentiAR. Dr. Silva reports grants and non-financial support from SentiAR during the conduct of the study. Outside the submitted work, he reports grants and non-financial support from SentiAR. Dr. Silva is also a co-Founder of SentiAR, serves on the SAB, and is a consultant to the company. In addition, a patent for the System and Method for Virtual Reality Data Integration and Visualization for 3D Imaging and Instrument Position Data has been issued and licensed to SentiAR. Patents have also been issued for Augmented Reality Display Sharing and for Gaze Based Interface for Augmented Reality Environment.

1. Silva JNA, Southworth M, Raptis C, Silva J. Emerging applications of virtual reality in cardiovascular medicine. JACC Basic Transl Sci. 2018;3:420-430.

2. Silva J, Silva J, inventors. System and method for virtual reality data integration and visualization for 3D imaging and instrument position data. U.S. patent application US20180200018A1. July 19, 2018. https://patents.google.com/patent/US20180200018A1/en

3. Opolski MP, Debski A, Borucki BA, Szpak M, Staruch AD, Kepka C, Witkowski A. First-in-man computed tomography-guided percutaneous revascularization of coronary chronic total occlusion using a wearable computer: proof of concept. Can J Cardiol. 2016;32:829.e11-e13.

4. Online only report no. OR03 - Assessment of display performance for medical imaging systems. 2005. https://www.aapm.org/pubs/reports/detail.asp

5. Cox K, Privitera MB, Alden T, Silva J, Silva J. Augmented reality in medical devices. In: Privitera MB, ed. Applied Human Factors in Medical Device Design. Elsevier; 2019:327-337.

6. Southworth MK, Silva JNA, Blume WM, Van Hare GF, Dalal AS, Silva JR. Performance evaluation of mixed reality display for guidance during transcatheter cardiac mapping and ablation. IEEE J Transl Eng Health Med. 2020;8:1900810.

7. Avari Silva JN, Southworth MK, Blume WM, et al. First-in-human use of a mixed reality display during cardiac ablation procedures. JACC Clin Electrophysiol. 2020;6:1023-1025.

8. Silva JNA, Privitera MB, Southworth MK, Silva JR. Development and human factors considerations for extended reality applications in medicine: the enhanced ELectrophysiology Visualization and Interaction System (ĒLVIS). Paper presented at HCII 2020.